As I was performance tuning a query, I found that a number of date calculation functions in SQL Server appear to be forcing a conversion of their date parameters to a specific datatype, adding computational work to a query that uses them. In programming terms, it seems that these functions do not have “overloads”, i.e. different code paths depending on the incoming datatype.

So let’s take a closer look at how this manifests itself.

What’s going on here?

Executing a query with a DATEDIFF() in a SQL Server query, I found that SQL Server adds an implicit datatype conversion in order to be able to process the input values of the function.

Consider the following example, where we have two date columns in a table, and we want to calculate the difference between them:

DECLARE @t TABLE (

a date NOT NULL,

b date NOT NULL

);

SELECT DATEDIFF(month, a, b)

FROM @t;

Here’s what the execution plan looks like:

But if you look closer at the “Compute Scalar”, it does a bit more than just the DATEDIFF() calculation.

datediff(month,CONVERT_IMPLICIT(datetimeoffset(7),[a],0),CONVERT_IMPLICIT(datetimeoffset(7),[b],0))

Note how it converts the two date columns, a and b, to datetimeoffset(7) using a CONVERT_IMPLICIT() before performing the DATEDIFF().

For the query I was trying to tune, I tried changing the datatype in my base table from datetime2(0) to datetimeoffset(0). This removed the implicit conversion from the plan, and my query ran about 20% faster.

What does the documentation say?

The SQL Server documentation states that the date input parameters for DATEDIFF() should be:

An expression that can resolve to one of the following values:

- date

- datetime

- datetimeoffset

- datetime2

- smalldatetime

- time

Testing

Because I’m inherently lazy (I believe the technical term is “result oriented”), I wrote a script to do the heavy lifting for me. If you’re curious, or if you want to replicate the results in your own environment, here’s the code.

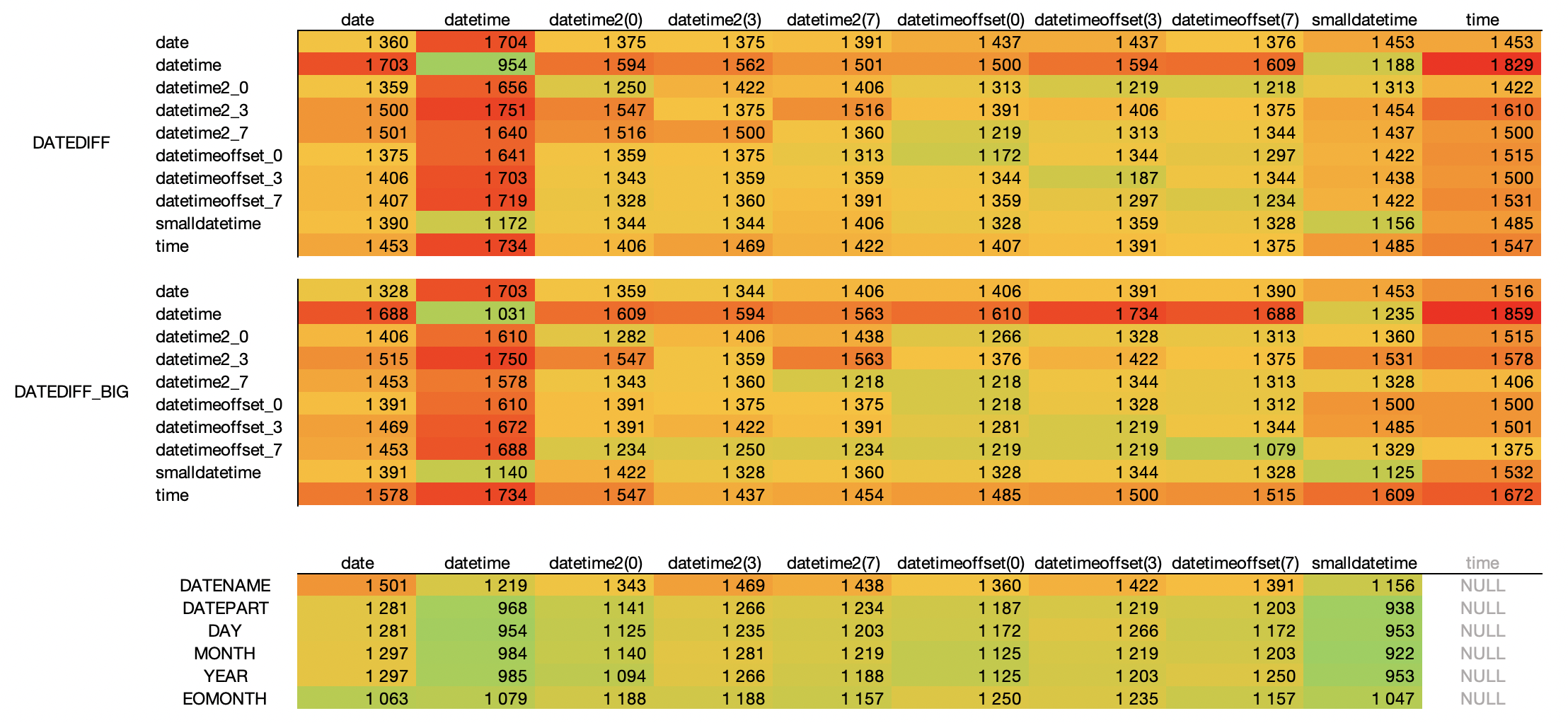

Execution times vary by almost a factor of two between some datatypes:

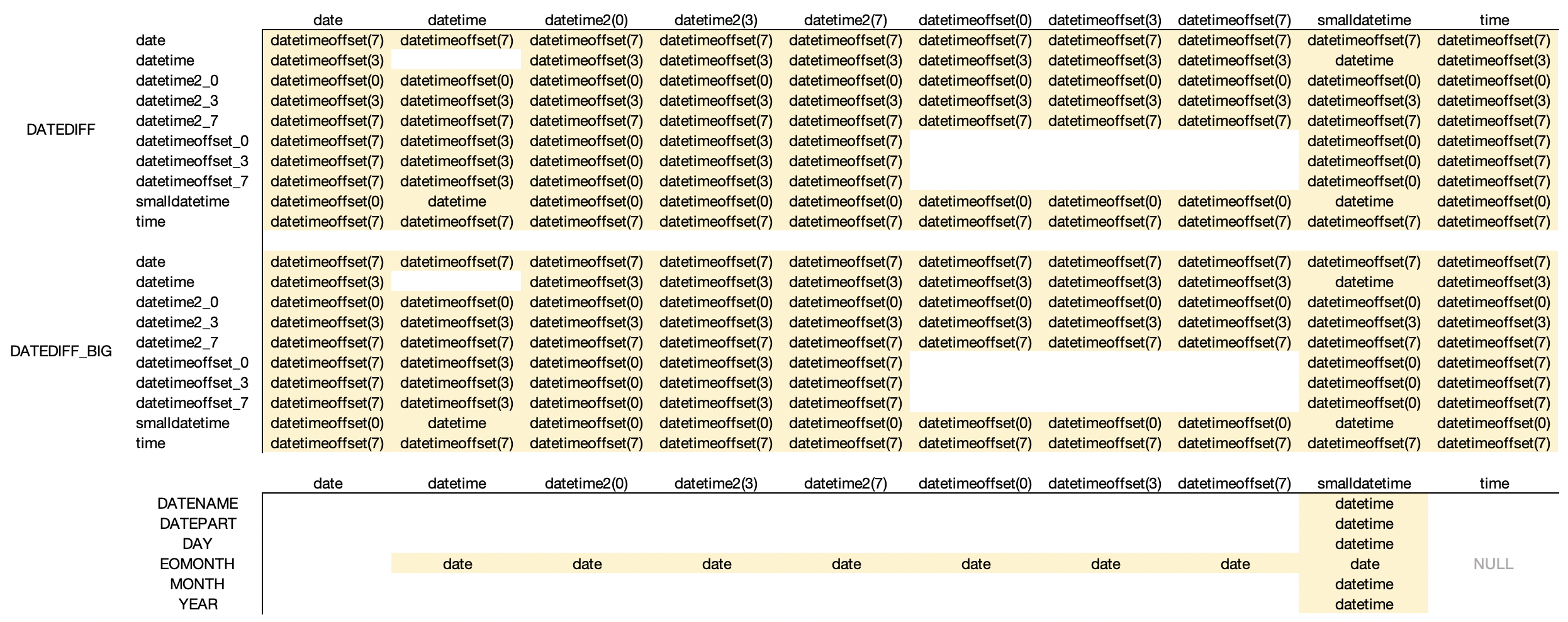

And here are the cases where I found implicit conversions in the execution plan:

Method

These results were compiled on SQL Server 2019 CU16, with single-threaded queries, and discarded output. Note that the timings are the duration, not the CPU time, so it is subject to background noise, even though the server wasn’t actually running any other workload at the time. The entire table was in the buffer pool and IO was negligible as far as I could tell. When I re-ran the tests, it consistently came in within 2% of my other attempts.

Conclusion

A couple of observations.

- DATEDIFF() and DATEDIFF_BIG() appear to perform relatively similar in this comparison. You would obviously expect a tiny overhead because the DATEDIFF_BIG() is twice as wide the that of DATEDIFF().

- DAY(), MONTH() and YEAR() are internally interpreted as their DATEPART() counterparts, which can be seen in the execution plan properties as well. No surprises.

- EOMONTH(), as opposed to all the other functions, always requires a “date” parameter. This is also clear from the documentation.

- For most other date functions, like DATEPART and DATENAME, there’s no implicit conversion (except from smalldatetime to datetime).

DATEDIFF() and DATEDIFF_BIG() use datetimeoffset internally*

Looking at the implicit conversion results, it appears that DATEDIFF() and DATEDIFF_BIG() do their thing in datetimeoffset. For that to happen, SQL Server needs to add an implicit conversion to datetimeoffset for values that are not already in that type.

But there appears to be an exception for datetime and smalldatetime values, as those specific types can be used directly.

None of these two behaviours are evident in the documentation, which gives the impression that the functions will accept any valid date/time datatype.

datetime and smalldatetime are really quick

This is one thing that really stood out to me.

In every category, datetime and smalldatetime are clear performance winners (as long all the arguments are datetime or smalldatetime).

The undisputed king of the hill is the datetime-to-datetime DATEDIFF – at about 1000 ms, it is significantly faster than a date-to-date comparison, which comes in at 30% more time. This is consistent across all of the functions I tested, not just DATEDIFF.

That said, comparing a datetime to anything but another datetime or smalldatetime will cost you anywhere up to 70% more execution time, because it once again forces an implicit conversion to datetimeoffset.

datetime and smalldatetime are not recommended for new development

Use the time, date, datetime2 and datetimeoffset data types for new work. These types align with the SQL Standard. They are more portable. time, datetime2 and datetimeoffset provide more seconds precision. datetimeoffset provides time zone support for globally deployed applications.

Make of that what you will. I probably wouldn’t design a database with datetime today, but keeping the above in mind, you might still be able to trim off a couple of milliseconds with some smart datatype choices.

3 comments