The subject of parallel execution of SQL Server queries is at times somewhat shrouded in mystery and uncertainty. Since the concept of parallel execution is such a significant (and indispensable tool) for performance tuning, it’s good to have a fair idea of how it works. In this first post in a series on parallelization, I’m going to try to sort out the apples from the pears, or the serial from the parallel if you will.

Example of a parallel query

Some database operations can be performed in parallel, which means that you can split the workload between two or more processor threads. Consider the following query on an example table:

SELECT AVG(a) FROM dbo.someTable;

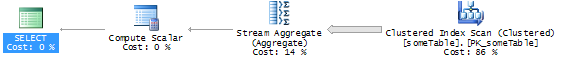

In its simplest form, this query will generate a serial plan (the opposite of a parallel plan), meaning it will use only a single processor thread. The execution plan will look something like this:

Reading the plan, right-to-left, you can see the following happening: The clustered index scan operator goes through each and every row of the table, and hands those rows to the Stream aggregate operator. The Stream aggregate is an extremely efficient so-called non-blocking operation, because it can process the data stream row-by-row without building up a buffer that takes up memory or spills to disk. In this example, Stream aggregate builds two working variables, let’s call them @count and @total. For each input row that passes the aggregate operator, @count is incremented by 1 and @total is incremented by the value “a” from the current row. Once all the table’s rows have gone into the Stream aggregate operator, it passes @count and @total to the Compute scalar operator, which performs a simple arithmetic operation, dividing @total by @count in order to arrive at the average “a” of the table’s rows.

At “compile time”, i.e. when SQL Server is deciding on an execution plan for the query, it will consider building a parallel plan if the workload ahead seems large enough and the system has enough free resources (available threads, among other things) to do so. The reason every plan isn’t automatically parallel is that a parallel plan introduces some overhead of its own, in the form of parallelism operators. This means that a very simple query could in fact run slower if you were to force it to run parallel.

Here’s how the same example query would look if it ran parallel. Note the yellow “parallelization” arrows in the lower-right corner of the those operators that are parallel:

The same query now has an extra set of operators, and here’s how it breaks down. I’m going to assume for this example that SQL Server has allocated four threads to the query. At the very start of the query, with the Clustered index scan operator, SQL Server allocates four simultaneously running threads to read about a quarter of the table’s rows each. Every thread then sends its rows to its respective Stream aggregate operator (there are four Clustered index scans and four Stream aggregates). Each Stream aggregate operator then aggregates the @count and @total values for its own stream. The results from those four streams are then collected by an operator called Parallelism, in this case a Gather streams operator. In the case of the example above, the Stream aggregate operators will return a single row each, so the Gather streams operator will UNION ALL those four rows into a single four-row stream, effectively turning the remainder of the plan into a serial segment, as it’s known. From here on, another Stream aggregate finishes the job by aggregating those four rows, calculating SUM(@count) and SUM(@total), and hands those grand totals over to the scalar operator that divides SUM(@total)/SUM(@count).

The result is the same, but when you can use four processor threads to cooperate on the heavy lifting, you can utilize the server’s resources more efficiently and get better query performance.

Serial and parallel segments

A query plan can consist of a number of segments that can each be either serial or parallel. The reason for this is that a lot of operations cannot be performed in parallel – SQL Server may not support parallel processing for the operation, or it may simply not make logical sense for it to be parallel at all. Each segment starts or ends with a Parallelism operator (except when the branch starts with a parallel operator, like the Clustered index scan in the example). There are three types that you will encounter in a query plan.

![]() Parallelism (Distribute Streams) marks the start of a parallel segment by splitting a serial stream of data into multiple, parallel streams. This happens when the plan transitions from a serial segment into a parallel segment. How the distribution of rows happens is technical beyond the scope of this post, but there are a few different methods SQL Server can choose between. The aim, however, is always to distribute the rows evenly while maintaining some type of logical partitioning so the subsequent parallel operators can still do their jobs.

Parallelism (Distribute Streams) marks the start of a parallel segment by splitting a serial stream of data into multiple, parallel streams. This happens when the plan transitions from a serial segment into a parallel segment. How the distribution of rows happens is technical beyond the scope of this post, but there are a few different methods SQL Server can choose between. The aim, however, is always to distribute the rows evenly while maintaining some type of logical partitioning so the subsequent parallel operators can still do their jobs.

![]() Parallelism (Gather Streams), like the name implies, merges a number of parallel streams into a single, serial stream, marking the transition from a parallel segment into a serial segment.

Parallelism (Gather Streams), like the name implies, merges a number of parallel streams into a single, serial stream, marking the transition from a parallel segment into a serial segment.

![]() Parallelism (Repartition Streams) swaps rows between different streams in order to optimize the query for what’s ahead. This happens when the plan transitions from one parallel segment to another and can happen for a number of reasons. For instance, different streams may have become unbalanced (which would severely reduce the efficiency of the parallel operation) or the streams have to be re-distributed in a different way in order to suit subsequent operators.

Parallelism (Repartition Streams) swaps rows between different streams in order to optimize the query for what’s ahead. This happens when the plan transitions from one parallel segment to another and can happen for a number of reasons. For instance, different streams may have become unbalanced (which would severely reduce the efficiency of the parallel operation) or the streams have to be re-distributed in a different way in order to suit subsequent operators.

Next week, we’ll look at different ways to prevent, force and control parallelism on SQL Server. Stay tuned!

3 comments