There are obvious advantages as well as challenges to schemabinding things, and whether you do or don’t is often a matter of preference. However, in some cases, you need schemabinding; when you’re designing indexed views, and to optimize user-defined functions. Here’s why:

Schemabinding

A user-defined function (UDF for short), like any T-SQL code, is dependent on any database objects it uses (i.e. references). Schemabinding a function, a procedure or a view will “tie” that code in as a dependency of those objects, so you can’t make any changes to them. This protects your code, because changes in the referenced objects could break your code; say, for instance, if you add or remove a column from a table, change a datatype, etc.

The very strength of schemabinding things is also what causes problems – changing the datatype of a single column from, say numeric(18, 2) to numeric(18, 4) will require you to potentially drop and recreate (or alter) a whole chain of views, triggers, etc in the correct order, and then recreate them all after the change.

Obviously, a database using schemabinding makes for a more robust design, but it also makes changes to the schema a bit more difficult.

User data access, system data access

But there’s another aspect as well. When you schemabind an object, like the word implies, you force a validation of which other objects that you reference. The result of this validation will include whether your object makes use of other tables, views, etc, known as “user data” here. You can verify this by creating a schemabound dummy function, like this:

CREATE FUNCTION dbo.fn_double(@i int)

RETURNS int

WITH SCHEMABINDING

AS

BEGIN;

--- Twice as nice.

RETURN @i*2;

END;

This function doesn’t access any user data (tables, views, other functions) and it doesn’t make use of system data, catalogs, etc. To verify this, we can use the OBJECTPROPERTYEX function:

SELECT OBJECTPROPERTYEX(OBJECT_ID('dbo.fn_double'), 'USERDATAACCESS') AS usrData,

OBJECTPROPERTYEX(OBJECT_ID('dbo.fn_double'), 'SYSTEMDATAACCESS') AS sysData;

The function will return 0 for both properties. If you were to remove or comment out “WITH SCHEMABINDING”, these two properties would return 1, because the function hasn’t been validated – this doesn’t mean that the function actually uses user data, only that the server doesn’t know, because it hasn’t been verified.

Whether the function references “outside data” or not is important when the server constructs certain UPDATE, INSERT or DELETE execution plans where you make use of the function. This has to do with “halloween protection”.

Halloween protection

For a more in-depth discussion of halloween protection, read a previous article on the Spool operator. To recap, the “halloween problem” has nothing to do with trick-or-treating, but rather the fact that it was discovered on a november day many years ago. Simply put, it deals with what happens when you perform a change (like an UPDATE) on a set of data that could cause it to be rearranged in the process, so that half-way through, you don’t know which rows you’ve already updated, and which ones remain.

Whenever SQL Server spots a potential halloween problem, it’ll create a temporary working set using a hidden temp table, called a Spool.

One way to create a halloween problem is to update rows using a function that (possibly) uses data that could be affected by the update. Recall that when a user-defined function isn’t schemabound, SQL Server doesn’t know if it makes use of user data, whereas if the UDF is schemabound, it does.

Example

Here’s some example data, a simple table with a thousand integer values, that we can work with:

CREATE TABLE #numbers (

i int NOT NULL,

c int NULL,

PRIMARY KEY CLUSTERED (i)

);

SET NOCOUNT ON;

--- Fill a worker table with 1000 rows:

DECLARE @i int=1000;

WHILE (@i<2000) BEGIN;

INSERT INTO #numbers (i) VALUES (@i);

SET @i=@i+1;

END;

SET NOCOUNT OFF;

Now, with the schemabound function, let’s try setting the c column to twice the value of i:

UPDATE #numbers SET c=dbo.fn_double(i);

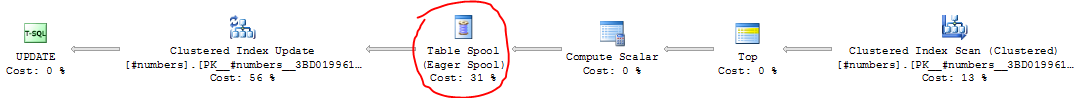

The resulting query plan, using a schemabound function, will look like this, where the “compute scalar” operator is where the actual calculation of the function happens and “clustered index update” is where the result of the operation is applied to the table:

![]()

Now, if you try to comment out “WITH SCHEMABINDING” from the function and retry the query, the function will look like this:

Instead of writing the results of the scalar calculation directly to the table, it’s first stored in a spool. Once the entire table has been processed and stored in the spool table, the results are applied back to the table. This is what makes the spool operator a blocking operator – the execution plan won’t continue until the spool operator has completed all of its work. This, of course, results in a performance penalty for your query, and that’s why, ideally, you would want to avoid a Spool where you can.

Summary

When you schemabind a user-defined function (UDF), SQL Server will look up and verify any referenced objects that the it depends on as part of the schemabinding. If there are none, the UDF will reflect this.

If a UDF does depend on user data (or might, because you haven’t schemabound it), SQL Server will apply halloween protection as a precaution by adding a spool operator to your query.

When your function does not depend on other data, make sure to schemabind it, in order to improve performance.

1 comment