It’s not entirely uncommon to want to group by a computed expression in an aggregation query. The trouble is, whenever you group by a computed expression, SQL Server considers the ordering of the data to be lost, and this will turn your buttery-smooth Stream Aggregate operation into a Hash Match (aggregate) or create a corrective Sort operation, both of which are blocking.

Is there anything we can do about this? Yes, sometimes, like when those computed expressions are YEAR() and MONTH(), there is. But you should probably get your nerd on for this one.

Consider the following table with accounting transactions, with a few million rows:

CREATE TABLE Finance.Transactions (

AccountID smallint NOT NULL,

AccountingDate date NOT NULL,

-- ... and a few other columns of lesser importance

Amount numeric(12, 2) NOT NULL,

-- This index actually shows as non-clustered in the

-- query plan images, but you get the picture. :)

CONSTRAINT IX_Finance_Transactions

PRIMARY KEY CLUSTERED

(AccountID, AccountingDate)

);

Say we want balances per account, per calendar month. That’s a simple enough query to write:

SELECT AccountID,

DATEFROMPARTS(

YEAR(AccountingDate),

MONTH(AccountingDate),

1) AS AccountingPeriod,

SUM(Amount) AS MonthlyChange

FROM Finance.Transactions

GROUP BY AccountID,

YEAR(AccountingDate),

MONTH(AccountingDate);

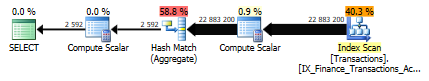

And here’s the query plan:

The problem here is with the Hash Match (aggregate) operator. Now, by “problem”, I mean that if the table is fairly large and your server instance is weak cheap, there will be potentially much memory grant and terrible spillage of disks.

I’ll admit, the memory grant was just a few MBs, and there was no spill to disk at all, but I’ll persist in my epic (and at first glance quite pointless) quest to eliminate blocking operators from this plan.

Just for the hell of it. Stay with me here, I’ll make it worth your while.

Right, where was I. I figure we’ll build this monster in layers. Our first task is to aggregate the data the best we can with the limitations at hand – i.e. not by year and month, but by accounting date. We’ll figure out the rest as we go along.

SELECT AccountID,

AccountingDate,

SUM(Amount) AS DailyTotal

FROM Finance.Transactions

GROUP BY AccountID, AccountingDate;

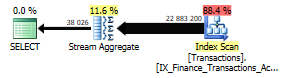

This is the simplest plan ever.

And let’s add a window aggregate to turn the DailyTotal column into running total over dates:

SELECT AccountID,

AccountingDate,

-- Running total by account over AccountingDate:

SUM(SUM(Amount)) OVER (

PARTITION BY AccountID

ORDER BY AccountingDate

) AS RunningTotal

FROM Finance.Transactions

GROUP BY AccountID, AccountingDate;

It’s more complex, but really not something we haven’t seen before. Because we’ve added a window function, we now have the usual collection of window operators, plus one more Stream Aggregate.

But now that we have running totals by AccountingDate, how can we turn them into monthly aggregates? By using the last running total of each month, and subtracting the running total of last month’s last date.

Let’s go ahead and create our own Segment operator of sorts. Using a window function (LEAD), we can peek ahead one row to see if this is the last TransactionDate of the month. If it is, we’ll keep it, otherwise, we’ll filter it out.

SELECT AccountID,

AccountingDate,

SUM(SUM(Amount)) OVER (

PARTITION BY AccountID

ORDER BY AccountingDate) AS RunningTotal,

-- Is this the same month as that of the next row?

(CASE WHEN DATEDIFF(month,

AccountingDate,

LEAD(AccountingDate, 1) OVER (

PARTITION BY AccountID

ORDER BY AccountingDate))=0

THEN 0 ELSE 1

END) AS IsLastDayOfMonth

FROM Finance.Transactions

GROUP BY AccountID, AccountingDate;

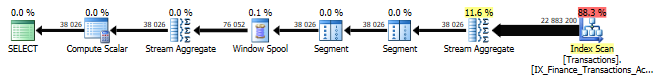

Things are starting to get messy in the query plan.

If it’s any consolation, there are still no blocking operators. Now, let’s filter out just the rows we want (the last row for each month), so there’s just a single row for each month, and subtract the previous month’s last running total.

We’ll just put our work so far in a common table expression and take it from there:

-- Common table expression (CTE) to package things up: WITH runningTotals AS ( SELECT AccountID, AccountingDate, SUM(SUM(Amount)) OVER ( PARTITION BY AccountID ORDER BY AccountingDate) AS RunningTotal, (CASE WHEN DATEDIFF(month, AccountingDate, LEAD(AccountingDate, 1) OVER ( PARTITION BY AccountID ORDER BY AccountingDate))=0 THEN 0 ELSE 1 END) AS IsLastDayOfMonth FROM Finance.Transactions GROUP BY AccountID, AccountingDate) SELECT AccountID, -- Pretty month format: DATEFROMPARTS( YEAR(AccountingDate), MONTH(AccountingDate), 1) AS AccountingMonth, -- This month's running total, minus -- that of last month is this month's -- change (i.e. the sum of Amount): RunningTotal-LAG(RunningTotal, 1, 0.0) OVER ( PARTITION BY AccountID ORDER BY AccountingDate) AS MonthlyTotal FROM runningTotals WHERE IsLastDayOfMonth=1;

And there it is. The plan looks like the endless tapeworm from a horror movie, but there’s no blocking operator. For one, that means it starts returning rows the second you click “Execute”. And it will scale linearly no matter how many rows you can throw at it.

Our refined (and slightly excentric) monster clocks in at 198 seconds on my dead-tired and very cheap Azure SQL Database instance. By comparison, that’s actually twice as fast as the original query. I get similar proportions on my 8-core VM, where the query goes parallel.

Performance tuning: 1. Windmills: 0

UPDATED:

Thank you all for the Twitter love and the comments. Here are a few things that surfaced:

Hugo’s partial aggregate

Hugo Kornelis suggested a partial aggregate, which technically isn’t non-blocking, but brings performance to about a similar level as my Don Quixote query. My interpretation of it:

WITH partialAgg AS (

SELECT AccountingDate, AccountID, SUM(Amount) AS Amount

FROM Finance.Transactions

GROUP BY AccountingDate, AccountID)

SELECT AccountID,

DATEFROMPARTS(

YEAR(AccountingDate),

MONTH(AccountingDate),

1) AS AccountingPeriod,

SUM(Amount) AS MonthlyChange

FROM partialAgg

GROUP BY AccountID,

YEAR(AccountingDate),

MONTH(AccountingDate);

This creates a two-step aggregation, where the first step creates a streaming (non-blocking) aggregate on (AccountingDate, AccountID), after which the relatively small output is hash aggregated to (year, month, AccountID).

Query statistics

Alex Friedman asked for the stats for the two queries.

On a DS4 v2 virtual machine (8 cores and not-sure-how-much-but-plenty of RAM) with SQL Server 2017 (build 14.0.3025.34), original query:

- Table ‘Transactions’. Scan count 9, logical reads 91185

- CPU time = 24032 ms, elapsed time = 3167 ms.

- Memory grant: 18 184 kB granted, 17 608 kB required

… and non-blocking query:

- Table ‘Transactions’. Scan count 9, logical reads 91701

- Table ‘Worktable’. Scan count 38080, logical reads 229006

- CPU time = 12796 ms, elapsed time = 1743 ms.

- Memory grant: 43 912 kB granted, 26 888 kB required

… and Hugo’s partial aggregate:

- Table ‘Transactions’. Scan count 9, logical reads 91711

- CPU time = 12343 ms, elapsed time = 1668 ms.

- Memory grant: 18 568 kB granted & required

To be honest, I was surprised to see such a memory grant (actually, any memory grant at all) on my non-blocking query, but as Hugo says in his comment, that’s probably the Spool.

On an Azure SQL Database, S0 tier, original query:

- Table ‘Transactions’. Scan count 1, logical reads 107675, physical reads 1, read-ahead reads 107673

- CPU time = 1657 ms, elapsed time = 219909 ms.

… and non-blocking:

- Table ‘Worktable’. Scan count 38080, logical reads 228999

- Table ‘Transactions’. Scan count 1, logical reads 107675, physical reads 0, read-ahead reads 107433

- CPU time = 1047 ms, elapsed time = 104359 ms.

… and Hugo’s partial aggregate:

- Table ‘Transactions’. Scan count 1, logical reads 107675, physical reads 0, read-ahead reads 105033

- CPU time = 1094 ms, elapsed time = 105316 ms.

Columnstore index

Finally, Uri asked what it would look like if we went full columnstore on the original query. I only ran this on the VM, as columnstore indexes are only supported on Premium Azure SQL Database instances:

- Table ‘Transactions_CCIX’. Scan count 8, logical reads 0, physical reads 0, read-ahead reads 0, lob logical reads 10715

- Table ‘Transactions_CCIX’. Segment reads 22, segment skipped 0.

- CPU time = 7126 ms, elapsed time = 1005 ms.

- Memory grant: 25544 kB granted, 24712 kB required.

It’s about twice as fast as the non-blocking rowstore query.

Hi , could we simple enable the batch mode and see what is going on?

I actually tried it, but it’s a whole different animal (including blocking operators), so I though it felt a bit off-topic for this post.

How about this approach? I see you have a second index on the table anyways…

ALTER TABLE Finance.Transactions ADD Yak AS 1000000 * CAST(AccountID AS BIGINT) + 100 * YEAR(AccountingDate) + MONTH(AccountingDate) PERSISTED;

GO

CREATE NONCLUSTERED INDEX IX_Transactions ON Finance.Transactions(Yak) INCLUDE(Amount);

GO

SELECT Yak / 1000000 AS AccountID,

DATEFROMPARTS((Yak % 1000000) / 100, Yak % 100, 1) AS AccountingMonth,

SUM(Amount) AS MonthlyTotal

FROM Finance.Transactions

GROUP BY Yak;

This approach would give a non-blocking stream aggregate on top of the index scan, as well as 2 scalar operators.

Haha, indexed computed columns is cheating! 🙂

Well… The AccountingDate column is not in the table create script, and the used index IX_ is unknown to us 😉

What is the statistics for my suggestion?

Will check when I get a few minutes!

Great post! A very interesting and creative use of local/global aggregation.

That being said, the method you use to compute the final aggregate from the partial aggregates is rather complex. It makes the query hard to understand and the execution plan you get is quite complex. Here is an alternative. (Using AdventureWorks for the demo, and I had to create an index to make the demo similar to your situation)

CREATE INDEX ix_demo

ON Sales.SalesOrderHeader (SalesPersonID,

OrderDate)

INCLUDE (SubTotal);

Original query:

SELECT SalesPersonID,

DATEFROMPARTS(YEAR(OrderDate), MONTH(OrderDate), 1) AS OrderPeriod,

SUM(SubTotal) AS MonthlySubTotal

FROM Sales.SalesOrderHeader

GROUP BY SalesPersonID,

YEAR(OrderDate),

MONTH(OrderDate);

Does a Hash Match (Aggregate) of all 31465 rows

New version:

WITH PartialAggregate

AS (SELECT SalesPersonID,

OrderDate,

SUM(SubTotal) AS DailySubTotal

FROM Sales.SalesOrderHeader

GROUP BY SalesPersonID,

OrderDate)

SELECT PartialAggregate.SalesPersonID,

DATEFROMPARTS(YEAR(PartialAggregate.OrderDate), MONTH(PartialAggregate.OrderDate), 1) AS OrderPeriod,

SUM(PartialAggregate.DailySubTotal) AS MonthlySubTotal

FROM PartialAggregate

GROUP BY PartialAggregate.SalesPersonID,

YEAR(PartialAggregate.OrderDate),

MONTH(PartialAggregate.OrderDate);

Unlike your version, this one is not entirely streaming. It does a Stream Aggregate on the original 31465 rows, but the resulting 1592 partial aggregates are then sorted (a blocking operator) before entering another Stream Aggregate.

If you really want 100% non-blocking, your solution is better. But you do pay a price – I see three Windows Spool operators, and these can (sometimes, depending on circumstances) become expensive.

Thanks! Yeah, I thought of a solution like this afer I published the post. Your method dramatically reduces the amount of data in the global aggregate (the blocking part).

I tried your suggestion, and it was indeed slightly faster than my idea. See the addendum to my post.

Thanks for the response and for the update to your post. Good job!

A useful idiom is a report period calendar. It gives a name to a range of dates. The worst way would be to use temporal math; it tells the world you do not think in sets or understand declarative programming yet. Here is a skeleton:

CREATE TABLE Report_Periods

(report_name CHAR(10) NOT NULL PRIMARY KEY,

report_start_date DATE NOT NULL,

report_end_date DATE NOT NULL,

CONSTRAINT date_ordering

CHECK (report_start_date 0)

etc);

These report periods can overlap; a fiscal quarter will be contained in the range of its fiscal year. I like the MySQL convention of using double zeroes for months and years, That is ‘yyyy-mm-00’ for a month within a year and ‘yyyy-00-00’ for the whole year. The advantage is that it will sort with the ISO-8601 data format required by Standard SQL. Remember that when you design report name column.

I fail to see how a calendar table is going to solve the blocking issue.

The hashing occurs because of the composite key.

Celko’s just being the usual SQL standard troll, I think

A rather simple query has become a very complex one. I am with Hugo on this, don’t write complex queries that others after you will be unable to maintain.

Adding a time dimension to the database is the most elegant way to do this.

Sure, but the point of the article was to demonstrate an efficient (i.e. non-blocking) pattern. I would typically agree with your approach for general-purpose applications, but blocking operators can be performance killers sometimes.

Elegance, maintainability and performance don’t always correlate. 🙂

“Elegance, maintainability and performance don’t always correlate. :)”

Does it, ever? 🙂

Yes, whenever Itzik does something.

Unfortunately, we don’t always have the luxury of being able to make schema changes to bad . . . erm . . . *vendor* designs.

Very interesting! Could you share the CPU and IO statistics, as well?

Thanks, I’ll check when I’m at my computer!

Hi! I’ve added the stats at the end of the article.

Excellent, thanks! That added some very interesting insights. Am I correct in understanding that the original query actually has the lowest memory grant of all the alternatives?

Actually, yes. To my surprise, I might add. I was sort of expecting the window spool to only generate a very small memory grant, but it turned out to be quite expensive.