I did some googling to see just how simple I could make a database deployment pipeline. I keep the DDL scripts in a git repository on the local network, but I can’t use Azure DevOps or any other cloud service, and I don’t have Visual Studio installed, so the traditional database project in SSDT that I know and love is unfortunately not an option for me.

So I googled a little, and here’s what I ended up doing.

Hold up

This post talks about making changes to production, which is the very goal of these types of tools. Nevertheless, don’t just copy-paste this code into your production environment. Always test every step. Especially if you found it on the Internet.

Now back to our regular programming.

What you’ll need

I’m using the following utilities and technologies in this post. All of them are cross-platform and free for the usage described here.

- git (open source)

- sqlpackage (Microsoft)

- sqlcmd (Microsoft)

- SQL Server Express Edition (Microsoft)

- PowerShell or PowerShell Core (Microsoft)

- Optional: spawn (Red Gate) as a replacement for a local SQL Server instance

The deploy process

I want to create an automation that puts whatever is in the “production” branch of my git repository into a production database. I’ve set up the following process on a build VM, but this feels like an ideal workload to run in a Docker container, if you have the time and inclination.

All the tools in this post are cross-platform, so you can run them on Windows, Linux or MacOS, with only minor variations in the code and setup.

Get the most recent code from git

I’m assuming you’ve already installed git and cloned the production branch of the repository to the build machine.

We need to pull the most recent changes from the origin:

git checkout production

git pull

We now have the latest database source code in the local directory on our build machine.

Create a build database

Our build database is a temporary SQL Server database in which we will build and test the schema from our repository.

I’m using a local SQL Server 2019 Developer Edition on the build server for this. Developer Edition is free, as long as you don’t do production work with it. I’m using sqlcmd to create a new, blank database that we will use as our temporary build database:

sqlcmd -S BUILDSERVER\SQL2019 -b -Q "CREATE DATABASE BuildDatabase;"

If you have Internet access, and don’t want to set up (and patch) your own build instance, check out Red Gate’s sweet, sweet Spawn.cc service, which lets you spin up a SQL Server container in the cloud. It’s completely free if you need less than 32 hours/month and no more than 2 GB of space.

spawnctl create data-container --name prod-build-environment --lifetime 1h

Populate the build database

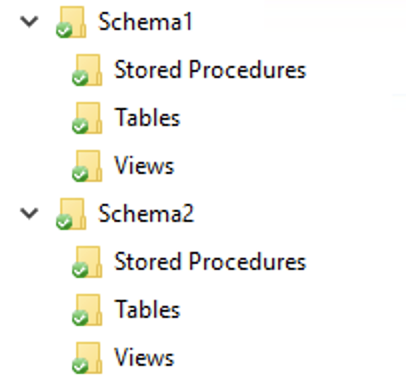

This is the part you’ll have to figure out yourself, depending on how you’ve stored your schema in the repository. In my case, I had all of the database objects organized as files in a folder hierarchy, so I’ll need to apply those scripts sequentially to the build database.

The tricky part is getting the dependencies right – you’ll want to create the tables before you add foreign key constraints, create the views, and so on. This step is also where you would do manual updates to the deploy code that wasn’t practical to do before you committed the code.

All of this means that what you do here will largely depend on the structure of your repository and the requirements of your particular database.

Here’s what I’ve done: I’m using a PowerShell script to compile a SQLCMD “runbook” script that I can execute using the sqlcmd utility.

function Generate-DeploymentRunbook(

[string]$path,

[string]$masterScriptFile

) {

# Collect a list of all .sql files, recursively:

$scripts = (Get-ChildItem -Path $path -Recurse | Where-Object { $_.Extension -eq ".sql" })

# CREATE SCHEMA:

$scripts | Where-Object { `

$_.DirectoryName -like "*\Schemas" -and `

$_.Name -notlike "db_*.sql" -and `

$_.Name -ne "INFORMATION_SCHEMA.sql" -and `

$_.Name -ne "guest.sql" -and `

$_.Name -ne "sys.sql" ` -and `

$_.Name -ne "dbo.sql" } `

| foreach { ':r `"'+$_.FullName+'`"' } `

| Out-File -FilePath $masterScriptFile # -Append

# CREATE TABLE

$scripts | Where-Object { $_.DirectoryName -like '*\Tables' ` } `

| foreach { ':r `"'+$_.FullName+'`"' } `

| Out-File -FilePath $masterScriptFile -Append

# Scalar & table-value functions

$scripts | Where-Object { $_.DirectoryName -like '*\*Functions' } `

| foreach { ':r `"'+$_.FullName+'`"' } `

| Out-File -FilePath $masterScriptFile -Append

# CREATE VIEW

$scripts | Where-Object { $_.DirectoryName -like '*\Views' `

| foreach { ':r `"'+$_.FullName+'`"' } } `

| Out-File -FilePath $masterScriptFile -Append

# CREATE PROCEDURE

$scripts | Where-Object { $_.DirectoryName -like '*\Procedures' } `

| foreach { ':r `"'+$_.FullName+'`"' } `

| Out-File -FilePath $masterScriptFile -Append

}

Generate-DeploymentRunbook `

-masterScriptFile "D:\Stuff\source\Deploy.sql" `

-path "D:\Stuff\DatabaseSchema"

Note: I’ve just hard-coded some object types in here. If you have other types of objects, like partition schemes & functions, synonyms, user-defined types, etc, you’ll need to include those in the script. I don’t just want to run every script in the repo in random order, because I need to be mindful of dependencies.

So think of this script as a rough template, rather than a complete, working script.

The “runbook” will look something like this:

:r "D:\Stuff\DatabaseSchema\dbo\Tables\Table1.sql"

:r "D:\Stuff\DatabaseSchema\dbo\Tables\Table2.sql"

:r "D:\Stuff\DatabaseSchema\dbo\Tables\Table3.sql"

:r "D:\Stuff\DatabaseSchema\dbo\Views\Overview.sql"

:r "D:\Stuff\DatabaseSchema\dbo\Stored Procedures\DoStuff.sql"

.. where the “:r” directive points to an existing .sql script to run.

To run this script, and deploy the database schema to the build database, we can now:

sqlcmd -S BUILDSERVER\SQL2019 -d BuildDatabase -b -i "D:\Stuff\source\Deploy.sql"

Note: The -b switch instructs sqlcmd to break if there’s an error somewhere along the way. This is extremely important, as deploying a half-built database to your target server will probably result in dropping existing objects that you weren’t supposed to drop.

Make a dacpac from the build database

Once the build database is complete, we’ll create a dacpac file. A dacpac is basically a standardized zip file with T-SQL scripts and a metadata model. If you’re on Windows, you can double-click it to extract it and take a look inside. Or you could rename it to a .zip file and extract the contents.

Our dacpac will contain the schema of the database. This will allow us to incrementally deploy changes to the target server in the next step, so we don’t need to know the current state of the target database and write change scripts ourselves. That’s what we came here for.

A very convenient way to create the dacpac file is with the the sqlpackage utility.

sqlpackage.exe

/Action:Extract

/SourceServerName:BUILDSERVER\SQL2019

/SourceDatabaseName:BuildDatabase

/TargetFile:D:\Work\ProductionDeploy.dacpac

/p:IgnorePermissions=True

/p:IgnoreUserLoginMappings=True

Note how I’ve used some extra property switches to prevent sqlpackage from scripting users and permissions. There are a few other switches for the Extract action that could be relevant for you.

Deploy the dacpac to the production database

Once you have the dacpac, it’s time to deploy it to the target database.

sqlpackage.exe

/Action:Publish

/SourceFile:D:\Work\ProductionDeploy.dacpac

/TargetServerName:PRODSERVER

/TargetDatabaseName:ProductionDatabase

/p:IgnoreColumnOrder=True

/p:BlockOnPossibleDataLoss=True

/p:DropPermissionsNotInSource=True

/p:DropRoleMembersNotInSource=True

/p:IgnoreFileSize=True

/p:IgnoreRoleMembership=True

/p:ScriptDatabaseOptions=False

As with the Extract action, the Publish action also comes with a selection of property switches that you should look into. Particularly, check out all those related to dropping things that are not part of your deploy.

Note: Carefully look through each and every one of the property switches and decide if it applies to your environment. Remember that your build database may be radically different in some aspects, and you don’t want to change those settings in the target database, nor do you want to accidentally drop objects that you didn’t intend to.

Here are some I would pay special attention to:

| BlockOnPossibleDataLoss | Kills the update if any data loss would occur (default=True) |

| DoNotDropObjectTypes | When DropObjectsNotInSource=True, these types (separated by semicolons) will not be dropped. |

| DropConstraintsNotInSource | |

| DropDmlTriggersNotInSource | |

| DropIndexesNotInSource | |

| DropObjectsNotInSource | |

| DropPermissionsNotInSource | |

| DropRoleMembersNotInSource | |

| ExcludeObjectTypes | Object types to be ignored (separated by semicolons) |

| GenerateSmartDefaults | Automatically create defaults for new, non-nullable columns on tables with existing rows |

| IgnoreColumnOrder | Setting this to True could prevent rebuilding tables when you add columns |

| IgnoreFileAndLogFilePath | Important: defaults to True to avoid changing the path of the data and log files. |

| IgnoreFileSize | Important: defaults to True to avoid resizing the database files. |

| IgnoreIndexOptions | Set this to False to make sure your indexes work the way you want them to. |

| IgnorePartitionSchemes | |

| IgnorePermissions | I would set this to True, and not make permission assignments in the repo. |

| IgnoreRoleMembership | I would set this to True, and not make role assignments in the repo. |

| ScriptDatabaseOptions | I’ve messed up more than one CI pipeline by allowing database changes in code. |

Use a publish profile

sqlpackage lets you use a “DAC Publish Profile” so that you can set and reuse a range of standard settings for your deploys. You could for instance use separate publish profiles for deploys to system test, acceptance test, and production, respectively.

Look for the /Profile: switch.

Dropping the build database

With the deploy completed, all that remains is to drop the local build database.

sqlcmd -S BUILDSERVER\SQL2019 -b -Q "ALTER DATABASE BuildDatabase SET SINGLE_USER WITH ROLLBACK IMMEDIATE; DROP DATABASE BuildDatabase;"

You might also want to delete or archive the DACPAC file.

Thanks

DevOps is definitely outside of my comfort zone. I was lucky to have DevOps legend and data platform MVP Alex Yates (b|t) help me out with some feedback on this post, but any mistakes that remain are still mine. Thanks, Alex!

Extra backtick?

$scripts | Where-Object { $_.DirectoryName -like ‘*\Tables’ ` } `

Missing close curly brace on first line, extra close curly brace on second line?

$scripts | Where-Object { $_.DirectoryName -like ‘*\Views’ `

| foreach { ‘:r `”‘+$_.FullName+’`”‘ } } `